As we wave farewell to 2023, a year full of innovations and the inevitable whirlwind of excitement around them, it’s vital to cut through the noise and uncover the actual value they can bring to the table.

The constant stream of updates, releases, and heightened interest in technologies like artificial intelligence, spatial computing, and the metaverse can be overwhelming. There’s rather a lot to keep up with – even when it’s your job!

We understand the challenges of staying ahead, so we wanted to create a handy guide for finding the value in emerging technologies for learning and development. We’re going to look at the hype cycle, artificial intelligence, the metaverse, and spatial computing.

And we’ll give you some food for thought about how each technology can support your own use cases.

Before we dive into specifics, let’s take a look at the hype cycle. It’s a useful concept that frames why there can be such a buzz around new technology, which quickly becomes yesterday’s news. It relates to Amara’s Law, which says:

“We tend to overestimate the effect of new technology in the short-term and underestimate its effect in the long-term.”

Amara’s Law

It perfectly describes the very human tendency to assume that something new will revolutionise the world immediately. When this doesn’t happen, we lose focus just at the point when it starts to change things.

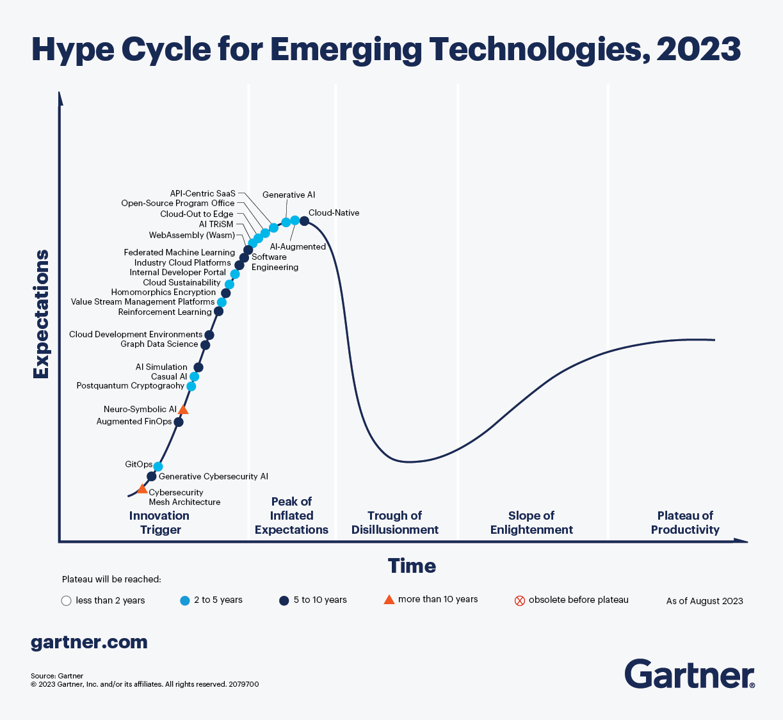

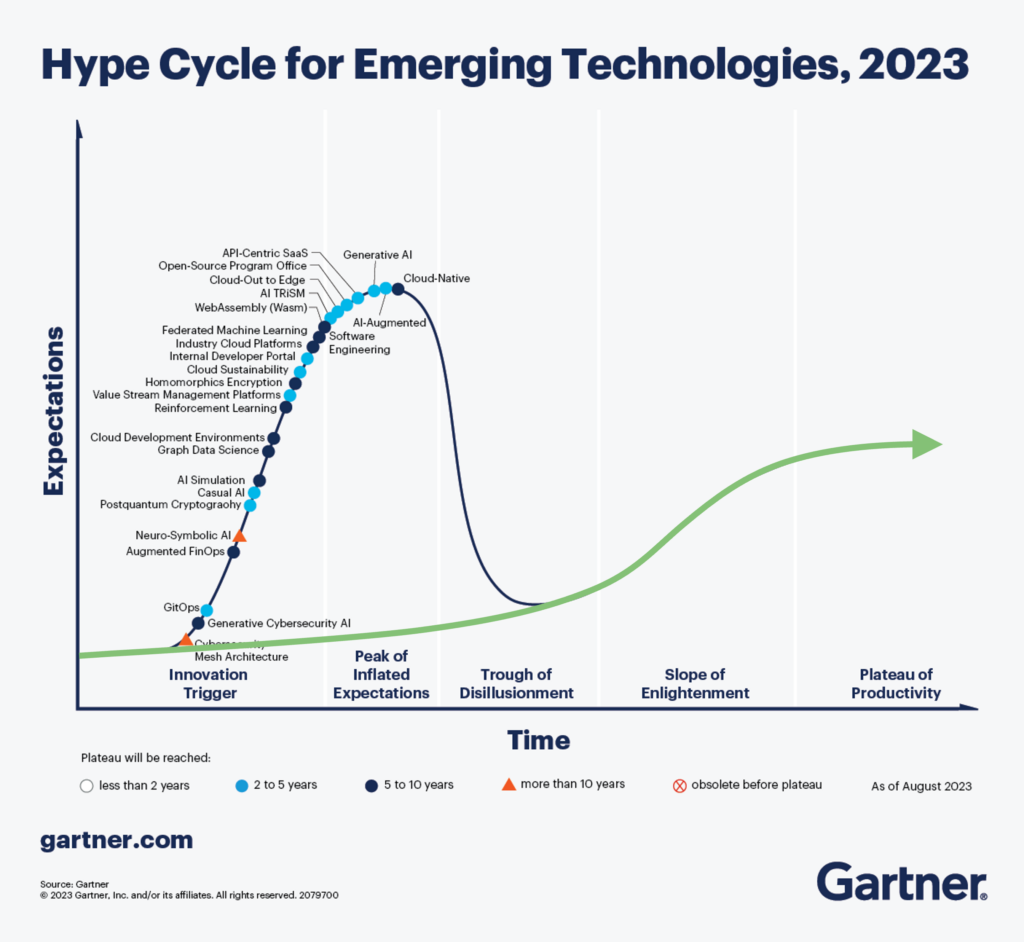

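The Gartner Hype Cycle is another useful way to think about hype. It charts how technologies tend to start off overhyped before they eventually enter the mainstream.

You may be familiar with the five stages that the cycle charts: the Innovation Trigger, the Peak of Inflated Expectations, the Trough of Disillusionment, the Slope of Enlightenment, and the Plateau of Productivity.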

Now let’s take a look at the aforementioned technologies…

Headlines about AI in the past year or so have ranged from apocalyptic predictions of AI-driven killer robots, to optimistic promises of it finding sought-after cures:

Though it’s not all bad:

When it comes to using AI for learning and development, we’ve been experimenting with diagnostic and generative AI.

Generative AI offers ways to personalise learning experiences. It could take learner data and create bespoke content that matches a learner’s personal needs. Or it could assist learning designers in keeping materials up to date in ever-evolving fields, or create dynamic simulations that can offer different experiences each time a learner tries out a scenario.

Diagnostic AI analyses and diagnoses problems in various domains, and we’ve been experimenting specifically with emotion recognition AI. This kind of AI is already being used in areas such as classroom engagement and market research testing, as well as in policing and border control.

For our experiments, we created an app that puts the learner at the centre, letting them practise having a tough conversation, with the AI analysing how they come across. The learner then takes this analysis to reflect on how they might adjust the way they handle the conversation.

We’ve expanded on the AI demo shown above since it was made. Firstly, an AI creates a live transcript of what you say, which becomes a powerful tool for both immediate feedback and later reflection.

A ChatGPT plugin then gives you feedback on the content of your message. So, you get personalised insight on how you’re coming across thanks to the diagnostic AI, combined with what you’ve actually said. A setup like this can analyse whether you’re using positive words, inclusive language, and so much more.
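To make the idea of content feedback a little more concrete, here is a minimal sketch in Python of the kind of check such a setup might run on a transcript. It is not the plugin described above: the word lists, the `analyse_transcript` function, and the suggested alternatives are all hypothetical, and a real tool would rely on a language model rather than fixed vocabularies.

```python
import re
from collections import Counter

# Hypothetical word lists, for illustration only. A real feedback tool
# would use a language model rather than fixed vocabularies.
POSITIVE_WORDS = {"appreciate", "thanks", "great", "understand", "together"}
NON_INCLUSIVE_TERMS = {"guys": "everyone", "manpower": "workforce"}


def analyse_transcript(transcript: str) -> dict:
    """Return simple counts describing the wording of a transcript."""
    # Lowercase the text and split it into words.
    words = re.findall(r"[a-z']+", transcript.lower())
    counts = Counter(words)

    # How often each positive word was used.
    positive = {w: counts[w] for w in POSITIVE_WORDS if w in counts}

    # Terms that have a more inclusive alternative.
    suggestions = {
        term: alternative
        for term, alternative in NON_INCLUSIVE_TERMS.items()
        if term in counts
    }

    return {
        "word_count": len(words),
        "positive_words": positive,
        "inclusive_language_suggestions": suggestions,
    }


if __name__ == "__main__":
    sample = "Thanks guys, I appreciate the effort."
    print(analyse_transcript(sample))
```

Even a simple count like this gives a learner something specific to reflect on, which can then sit alongside the richer feedback from the diagnostic AI.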

(If you’d like a deeper dive into AI, we recently covered the various types of AI and where they were as of the end of 2023 in our blog on diagnostic AI.)

What the metaverse is can still depend on who you ask, despite it coming to prominence over two years ago now. (We did a little digging in a blog series back in November 2021.)

Meta’s own vision for a metaverse is one that “will allow you to explore virtual 3D spaces where you can socialise, learn, collaborate and play”. And while cynicism is still high, there’s absolutely something to be said for spaces that can help us feel present with each other. That’s especially true as companies and colleagues continue to navigate remote, hybrid, and office work.

This is where we’ve seen the most interest among our clients. We’ve spent time creating digital twins of their offices and running metaverse literacy sessions with their learning teams. Though a simple recreation of an office space might not be the most useful endeavour, it provides a familiar gateway for these teams to get used to interacting in a virtual space.

We work with clients to get them familiar with the controls, since for those not used to gaming or virtual spaces, just moving around can be a skill in itself. They can try the spaces from a range of hardware – mobiles, desktops, headsets – to understand the different experiences. We help them explore the main functionality so they get an idea of how these communal spaces might be useful, and what barriers exist. In one case, we hosted over 600 participants across two hour-long sessions for a major financial business.

What our clients have found most valuable is just trying out these virtual environments for themselves. They may have spent plenty of time reading about the metaverse and its potential, but there’s nothing quite like getting stuck in yourself to understand the value for your use case.

However we might define the metaverse, there are three core features that are usually agreed upon:

And that means the metaverse has some great potential benefits for learning. We can consider the cultural intelligence hypothesis to understand why.

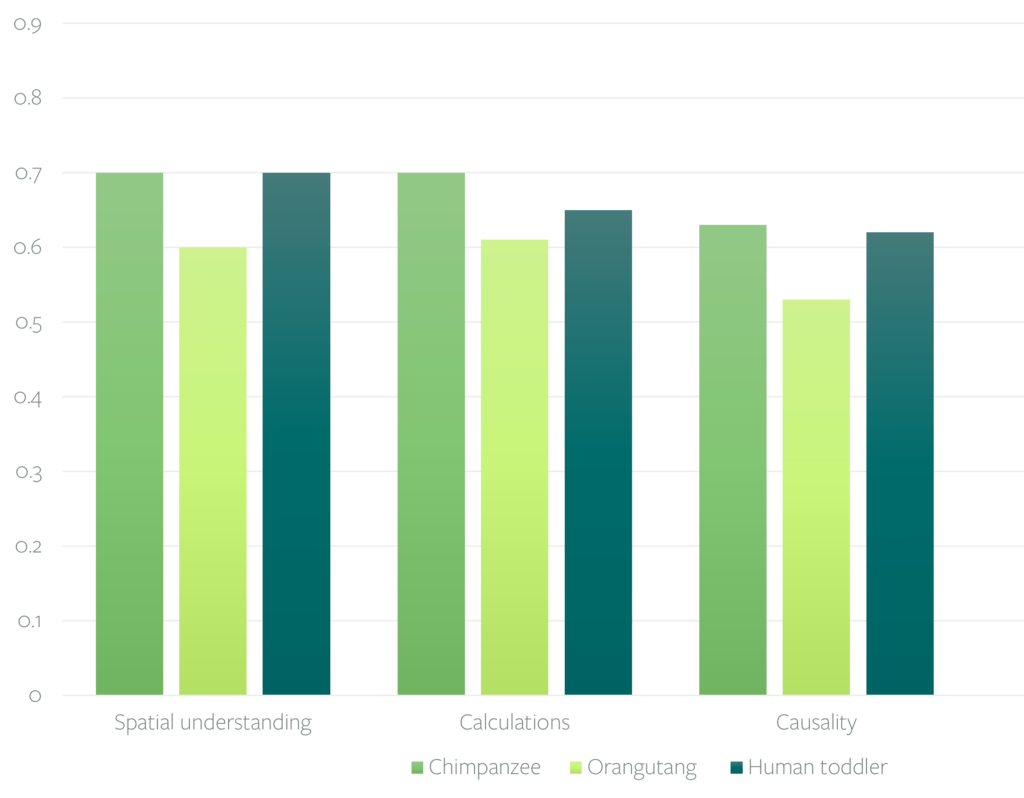

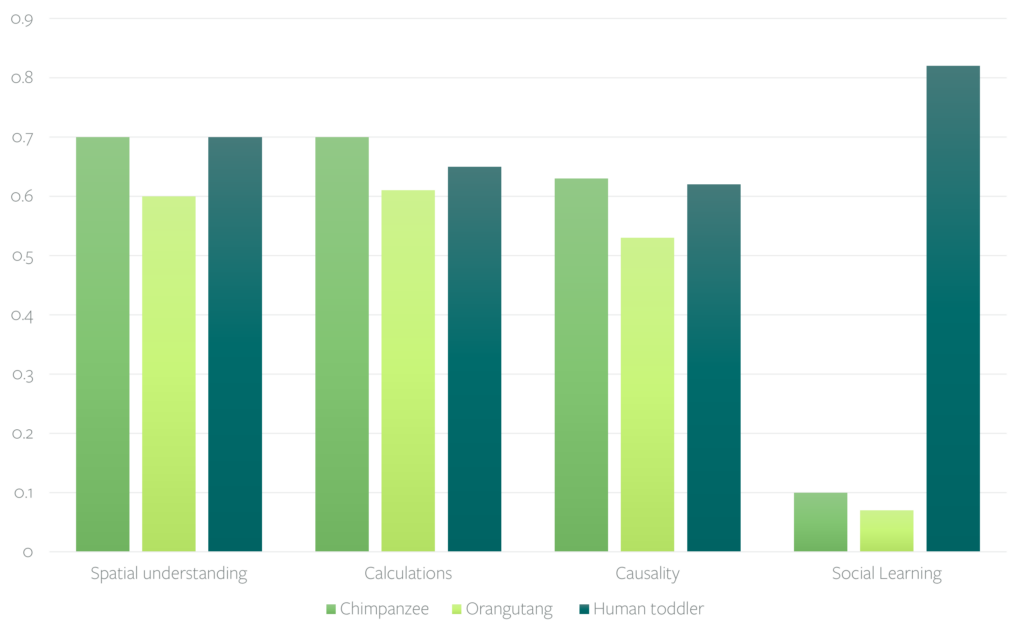

We’ve long thought that humans are the dominant species because of our ‘general intelligence’. A landmark study by E. Herrmann et al. took groups of our closest primate relatives – chimpanzees and orangutans – and set them cognitive tests alongside a group of 2.5-year-old toddlers.

But it turned out that across the areas of spatial understanding, calculations, and causality, the three groups scored about the same.

However, when it came to tasks that involved learning from another person, the toddlers far outstripped the apes.

In fact, they were about eight times more effective at learning from others: learning from others is our superpower.

Rather than being more suited to understanding the physical world, we’re much better suited than other animals to understanding the social world.

That’s where we tend to be in our comfort zone, and why face-to-face learning was so often our default for training. In today’s world of remote and hybrid work, there’s a real hunger for new kinds of social learning approaches. (All this said, it’s still crucial to consider everyone’s needs in learning, and to recognise how neurodivergences might impact the way someone prefers to learn.)

Many of the learning experiences we’ve developed and delivered recently have been along this theme, with networked solutions that allow learners to collaborate and interact in a virtual space – from desktop games, to VR experiences, to a group augmented reality puzzle game, or a simulation blended into hybrid classroom sessions.

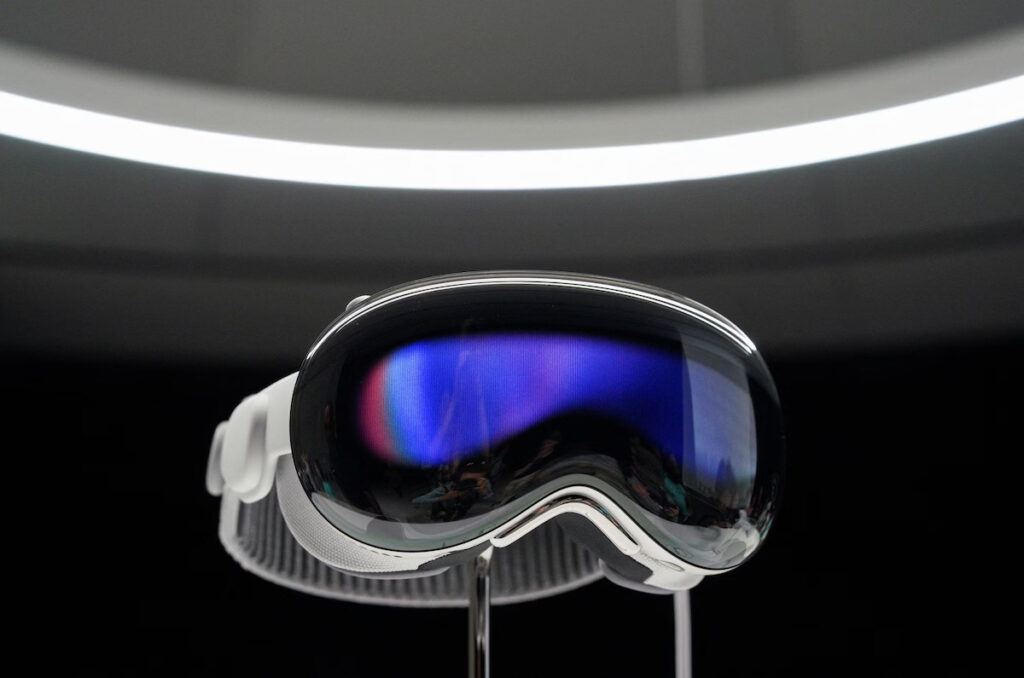

Although Apple brought the term spatial computing to the fore with the announcement of its Apple Vision Pro, it’s actually been around far longer. Back in 2003, Simon Greenwold defined it as ‘human interaction with a machine in which the machine retains and manipulates referents to real objects and spaces’.

More recently, definitions such as this from Cathy Hackl hold spatial computing as an umbrella encompassing technologies like virtual and augmented reality: “an evolving form of computing that blends our physical world and virtual experiences”.

Where Apple’s foray into head-mounted devices will lead remains to be seen. Will it be the killer device for learning and training? The must-have for entertainment and gaming? If nothing else, Apple is known for creating excellent user experiences out of technology that’s been around for a while.

In the meantime, we do know that spatial computing already has plenty of benefits to offer. Whether in manufacturing with augmented reality assistance or virtual prototyping, or in training with virtual reality, the use cases are there and haven’t gone away.

It’s the hardware that’s continued to change and improve over the last few years. Eye-tracking and hand-tracking have come on in leaps and bounds. The release of the Meta Quest 3 brings much-improved passthrough in a consumer device, opening up mixed reality to the wider public. You might think of us as being on a journey similar to the development of the first smartphones. Whether we’re closer to the Motorola DynaTAC or the iPhone remains to be seen.

In truth, we know there’s value to be found in the technologies that spatial computing encompasses, such as virtual reality, augmented reality, and mixed reality.

But its resurgence as a term reminds us:

And if you’re investigating where any of these technologies can help you meet your learning needs, we’re here to help you. Just get in touch!
