Kenes Group is one of the world’s leading Professional Conference Organisers (PCOs) and the only global PCO dedicated to medical and scientific events. It has hosted over 3,800 conferences in more than 100 cities worldwide. Alongside its extensive conference programme, Kenes offers Continuing Medical Education content, focused on improving the delivery of medical education.

We worked with Kenes Group on an activity that gives healthcare practitioners (HCPs) a way to practise empathetic conversations with patients who have diabetes. Learners simulate a conversation with a fictional patient, and emotional recognition and generative AI provide feedback on how the learner is communicating with the patient. The focus is on tackling biases and stigma around weight in patients with diabetes.

We first met Kenes Group at Learning Technologies in 2023, where we were demonstrating our emotional recognition proof-of-concept. The demo invites users to practise delivering bad news to a manager. It uses emotional recognition AI to give feedback on how you’re coming across, so you can reflect on how you might adjust your delivery in future conversations. It’s an evolution of our work with Lloyds Banking Group on ‘Hear to Listen’, an activity that gives learners a safe space to practise talking to a colleague going through a tough time.

Kenes Group saw the potential for improving patient outcomes by using this kind of learning to help HCPs better handle sensitive conversations with their patients, particularly those living with diabetes and obesity.

Stereotypes about people with obesity lead to stigma and discrimination. When that stigma comes from healthcare practitioners, it can mean that people end up avoiding healthcare altogether. The challenge lay in overcoming biases and stigmas surrounding weight, so that practitioners would approach conversations with sensitivity and without judgment.

So, we set out to create a piece of learning that would give HCPs a chance to practise these conversations as many times as they wanted, in a safe space for self-reflection.

We worked closely with Kenes Group to create a fictional patient, representative of a typical patient a practitioner might meet, and to define the challenges they face. From there, we developed the script, which gives practitioners several opportunities to respond during a simulated video call with the patient.

We took care to select the right actor to portray the patient for maximum impact. Together with Kenes Group, we created Lucy: a patient seeking advice on managing her recently diagnosed diabetes while juggling parenthood and her mental health.

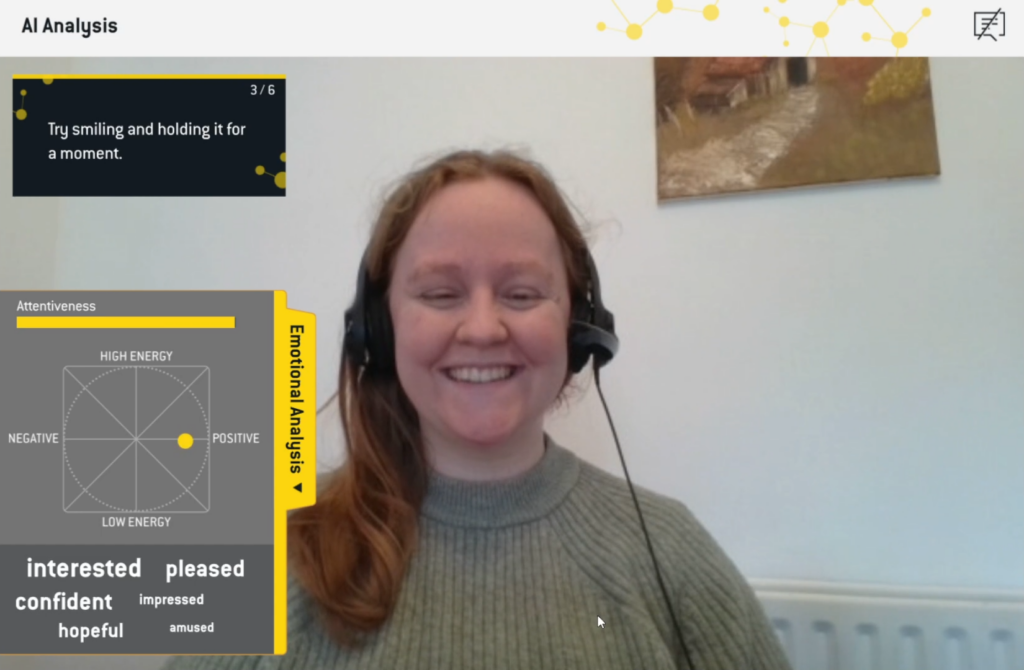

Throughout the conversation, the experience gives learners advice on what to consider in their responses, based on guidance we researched from leading organisations.

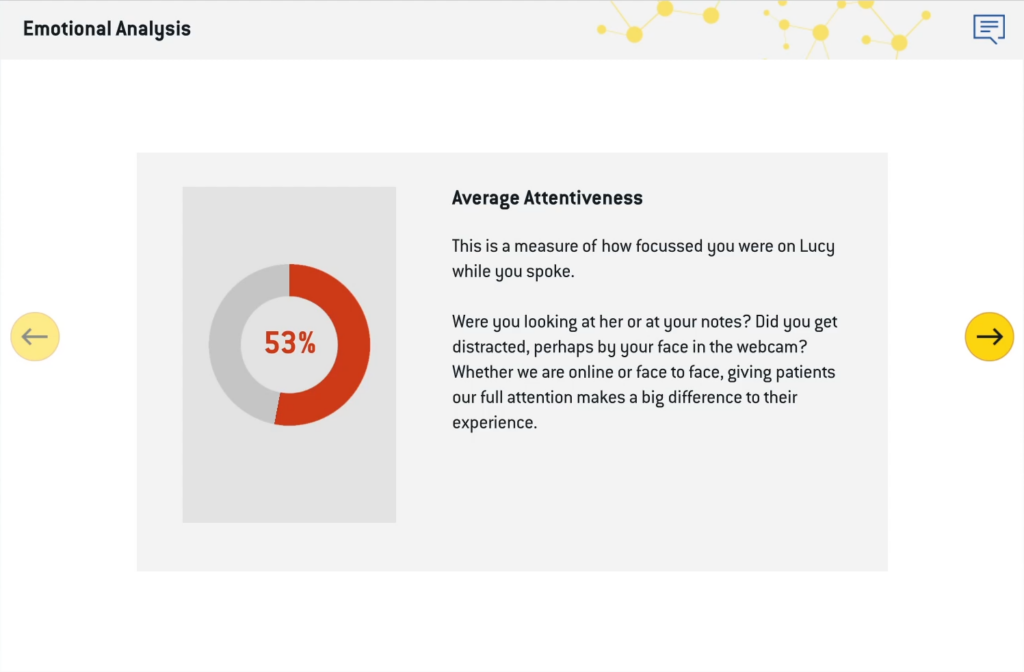

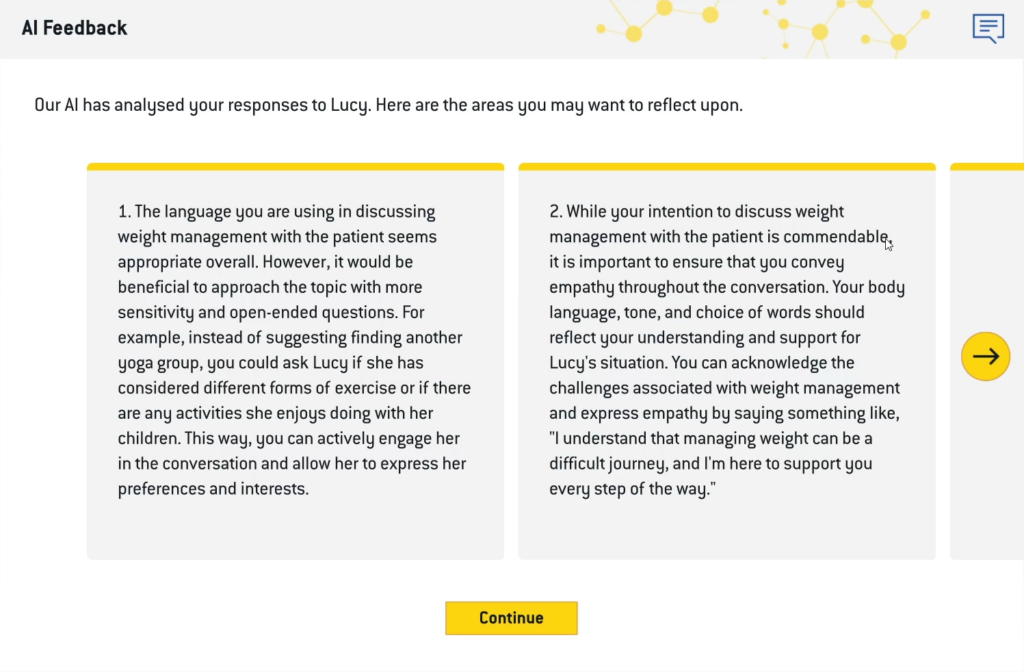

The activity analyses both the delivery and the content of what the learner says, as well as how attentive they seem when speaking with Lucy. Crucially, the activity doesn’t offer medical advice or try to tell you exactly how you’re feeling. Instead, it acts as a mirror, helping you reflect on how you may come across to a patient.

This is the first project in which we’ve deployed either generative or emotional recognition AI, and we were thrilled to have the opportunity!

It also proved a valuable experience in fine-tuning our use of AI to get the best results.

The format of the activity means it can be expanded with new scenarios and new patients, adding variety to the conversations HCPs can practise.

For more on how the project came to be, check out our case study with Lloyds Banking Group on turning learners into activists, and how that work evolved into our emotional recognition proof-of-concept.

We’re always happy to talk to you about how immersive technologies can engage your employees and customers. If you have a learning objective in mind, or simply want to know more about emerging technologies like VR, AR, or AI, send us a message and we’ll get back to you as soon as we can.