Here at Make Real, over the last few months, we’ve been on a journey exploring what AI has to offer the world of learning – and specifically, what diagnostic AI can do to enhance learning performance. We’re not AI experts; we’re users and practitioners, just like you. We want to demystify AI, showcase its real-world applications and cut through the hype.

We’ve shared our findings from our experiments in talks across the last few months and we thought it was time to bring them together in this blog post. But if you’d rather hear it directly from our Managing Director and Director of Learning, we’ve got a recording below. Otherwise, read on…

While the buzz around AI has exploded in the last year or so, it’s been around in various forms for decades. Below is one way of categorising the different types (full disclosure, ChatGPT gave us some input on this).

Generative – e.g., MidJourney

Focuses on generating new content or data such as text, images, or music, by learning patterns from existing datasets.

Diagnostic – e.g., IBM Watson Oncology

Designed to analyse and diagnose problems in various domains, such as healthcare, engineering, or finance, by leveraging machine learning algorithms and historical data.

Reactive – e.g., IBM Deep Blue

Responds to specific situations based on predetermined rules. It does not learn from past experiences or predict future actions. This is the type of AI that beat a world champion at chess in 1997.

Narrow – e.g., Google Search or Siri

Also known as weak AI, this type focuses on specific tasks and operates within a limited context, like language translation or facial recognition.

Limited memory – e.g., Tesla Autopilot

Can learn from previous experiences and data, but its memory is limited, and it is not capable of retaining knowledge indefinitely.

General – e.g., ChatGPT (sort of)

Also known as strong AI, it aims to possess human-like intelligence and perform any intellectual task a human can do. While we tend to think of it purely as generative AI, you can have a conversation with it. (So far, it’s the closest we’ve seen AI come to passing the Turing test – we’ll let you explore that particular rabbit hole yourself!)

Theory of mind – e.g., Kismet Robot, MIT

Still in the research phase. Aims to understand and interpret human emotions, beliefs, and intentions to interact more effectively with humans.

Self-aware – none as yet!

A hypothetical AI type that would possess self-awareness and consciousness, allowing it to make decisions and interact with its environment.

Many of us have already put generative AI such as MidJourney and ChatGPT’s content creation tools to use. In the recorded presentation and in the image below, many of the images and icons were created by MidJourney. And indeed, we used ChatGPT to lend a hand in structuring the presentation (and this blog) – helping us overcome the tyranny of the blank page.

An animated gif showing someone using ChatGPT to create a presentation outline.

An animated gif showing someone creating icons using the Midjourney image generator AI.

By using this type of AI to give us a jumping-off point, or to speed up otherwise tedious processes, we can spend the time saved on more valuable tasks. For example, using AI to build out a presentation, and using the extra time on deeper research.

What we’ve been more interested in is diagnostic AI and how it can analyse things such as the tone of what we’re saying, or our facial expressions, to measure how engaged we are. And it’s easy to see where the potential benefits might be for learning and development.

Unsurprisingly, we asked an AI – two, in fact:

ChatGPT:

Google Bard

From a learner’s perspective, improved performance is probably the most interesting of these. After all, that is the goal of any kind of learning one might undertake. And this is where emotional recognition AI comes in.

It’s already being used in many settings: measuring classroom engagement, market research testing, interviewing new candidates (especially at scale), as well as in policing and border control.

Even Disney has its own algorithm to predict how a member of the audience will react to the rest of a film after analysing their facial expressions for just ten minutes. But, this said, the AI wasn’t any more accurate than simply asking an audience member their opinion.

And it’s crucial to remember that the AI is only as good as the human coding that goes into it – and it can become a vehicle for our own biases. A 2018 study found that one algorithm was twice as likely to report black participants’ expressions as ‘angry’, compared to white participants pulling the same expression. So, how we measure emotion and the datasets we use to train AI are really important.
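To make the "twice as likely" idea concrete, here is a minimal sketch of how you might check a tool for this kind of skew yourself. The function names and the toy data are our own illustrative assumptions, not figures from the study: you collect the labels the AI gives to two groups pulling the same expression and compare how often a given label comes up.

```python
def label_rate(predictions: list[str], label: str) -> float:
    """Share of predictions that equal the given label (0.0 for an empty list)."""
    if not predictions:
        return 0.0
    return predictions.count(label) / len(predictions)


def rate_ratio(group_a: list[str], group_b: list[str], label: str) -> float:
    """How many times more often `label` is reported for group A than for group B."""
    rate_a = label_rate(group_a, label)
    rate_b = label_rate(group_b, label)
    if rate_b == 0.0:
        # Avoid dividing by zero: no skew if neither group gets the label.
        return float("inf") if rate_a > 0.0 else 1.0
    return rate_a / rate_b


# Invented toy data for illustration only: both groups pull the same expression.
group_a = ["angry"] * 4 + ["neutral"] * 6
group_b = ["angry"] * 2 + ["neutral"] * 8

ratio = rate_ratio(group_a, group_b, "angry")
print(f"'angry' is reported {ratio:.1f}x as often for group A")
```

A ratio close to 1 suggests the label is applied evenly; anything well above or below that is a prompt to look harder at the training data.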

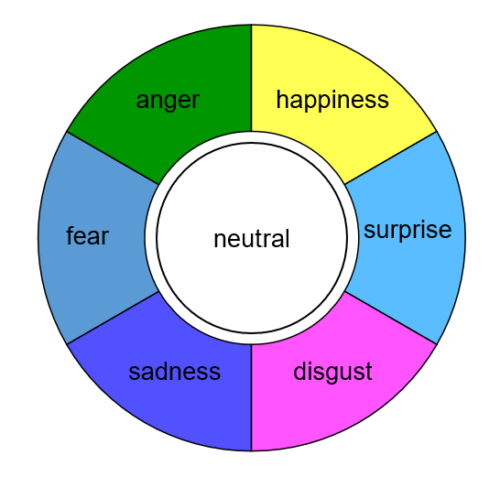

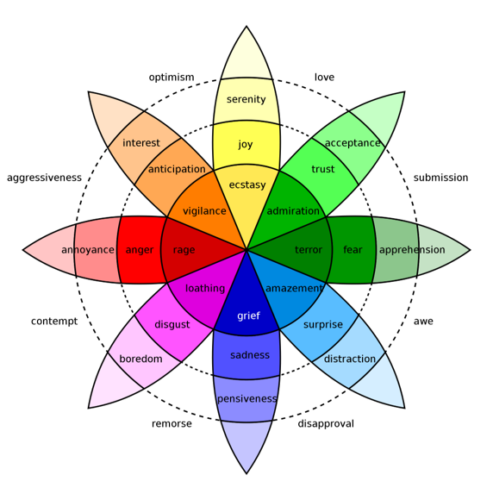

A big question – as Brené Brown puts it, quoting a neuroscientist, “there are as many theories of emotions as there are emotional theorists”. This outlines a fundamental challenge with using AI – if we can’t agree on what good looks like, how are we supposed to train a machine to do it for us? That aside, there are two main types of theory that tend to dominate emotional recognition technology:

Categorical model

Dimensional model
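For the more technically minded, here is a rough sketch of how the two approaches differ as data. It rests on our own assumptions: a categorical model is treated as a score per discrete emotion label, and a dimensional model as a point on two continuous axes (commonly valence and arousal). The class names, label names and value ranges are hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass


@dataclass
class CategoricalReading:
    """One score per discrete emotion label, e.g. {"happiness": 0.7, "anger": 0.1}."""
    scores: dict[str, float]

    def dominant(self) -> str:
        # The label with the highest score wins.
        return max(self.scores, key=self.scores.get)


@dataclass
class DimensionalReading:
    """A point on two continuous axes, each assumed to run from -1.0 to 1.0."""
    valence: float  # unpleasant (-1) to pleasant (+1)
    arousal: float  # calm (-1) to energised (+1)

    def quadrant(self) -> str:
        mood = "pleasant" if self.valence >= 0 else "unpleasant"
        energy = "high energy" if self.arousal >= 0 else "low energy"
        return f"{mood}, {energy}"


categorical = CategoricalReading({"happiness": 0.7, "surprise": 0.2, "anger": 0.1})
dimensional = DimensionalReading(valence=0.6, arousal=-0.3)

print(categorical.dominant())   # happiness
print(dimensional.quadrant())   # pleasant, low energy
```

The practical difference is that the categorical reading forces a choice between named emotions, while the dimensional reading can describe in-between states without naming them.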

So, what have we done with all of the above?

As with any of our projects, whether for clients or our own internal work, we wanted to start small – and iterate.

We set out to create an app that puts you at the centre, with the technology just augmenting our human intelligence. The app would let you practise having a tough conversation, then feed back an analysis of your performance, so you can review it and act on the suggested areas for improvement. What’s key here is that it’s up to the learner to work with that feedback – they have to decide what to take on board and what feels less relevant to them.

Here’s a quick video of our Director of Learning, Sophie, demonstrating our proof of concept:

At the beginning, you get acclimatised to the analysis showing your mood based on your facial expression. We came up with a prompt of delivering bad news to your manager – as you begin, the analysis disappears so you can concentrate on delivering the message. Finally, the app gives you an overview of your dominant mood, energy, attentiveness and dominant emotions. While we used a prompt for this particular demo we’ve shared at events, you could practise any kind of conversation you like.
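As an illustration of the kind of summary described above, here is a minimal sketch that turns a list of per-frame emotion labels into a dominant emotion and the share of time spent in each of the top few. It is not the code behind our proof of concept: the function name, the label names and the assumption of one label per video frame are all hypothetical.

```python
from collections import Counter


def summarise_session(frame_labels: list[str], top_n: int = 3) -> dict:
    """Summarise per-frame labels into a dominant emotion and top-N shares."""
    if not frame_labels:
        return {"dominant": None, "shares": {}}

    counts = Counter(frame_labels)
    total = len(frame_labels)
    top = counts.most_common(top_n)  # most frequent labels first

    return {
        "dominant": top[0][0],
        "shares": {label: round(count / total, 2) for label, count in top},
    }


# Invented example: one label per analysed frame of a practice conversation.
frames = ["neutral", "neutral", "nervous", "neutral", "sad", "nervous"]
summary = summarise_session(frames)
print(summary["dominant"])  # neutral
print(summary["shares"])    # {'neutral': 0.5, 'nervous': 0.33, 'sad': 0.17}
```

Reporting shares rather than a single label keeps the feedback in the learner’s hands: they can see that "neutral" dominated while still noticing the moments that read as nervous.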

We ran a small study among the Make Real studio, getting an idea of how it would work with a variety of participants – and how factors like accents or facial hair affect the results.

We found it was roughly 10% less accurate than a human at detecting emotions (academic studies find the AI to be about 20% less accurate). But the affordance of the AI is that it can act as a kind of always-on, private coach, allowing learners to build their confidence in a safe space.

We’re looking into how we can integrate it with other tools, such as ChatGPT. We’d take a transcript of what you’ve said and from there we could do a few things with ChatGPT. We can:

We’ve come up with a few things to bear in mind when you get started. Overall, our best tip is – just try it! There’s nothing quite like learning by doing and that’s very much the case with these AI tools.

If you have any questions about anything above, please do get in touch with us. We’d love to discuss the work we’ve been doing – and we’re keen to hear if you’ve got any use cases in mind too.

We’re always happy to talk to you about how immersive technologies can engage your employees and customers. If you have a learning objective in mind, or simply want to know more about emerging technologies like VR, AR, or AI, send us a message and we’ll get back to you as soon as we can.