Create a narrative experience utilising immersive technologies in ways that will drive audience adoption forward through engagement and innovation, whilst enabling venues to operate a performance model that would allow them to generate actual revenue, rather than relying upon funding commissions to show immersive experiences.

CreativeXR is funded by Digital Catapult and Arts Council England, aiming to give creative talent the opportunity to experiment with immersive technologies to create new experiences that inspire audiences.

Focused on the creative industries, particularly the arts and culture sector, the programme gives the best creative teams the opportunity to develop concepts and prototypes of immersive content (virtual, augmented and mixed reality).

The programme offers access to early stage finance, facilities, industry leaders and commissioning bodies with the opportunity to pitch for further development funding.

Working with local theatre venue, The Old Market, Make Real offered technical capabilities to create an immersive theatre prototype blending VR and AR within a shared space.

To reach a more mainstream audience beyond traditional theatre-goers, Damien Goodwin (known for Teachers, Hollyoaks and TOWIE) was brought on board to craft the narrative concept, based upon H.G. Wells’ popular story “The Time Machine”.

The Make Real design team worked with Damien to create a series of narrative interactions, exploring collaboration in a shared-space between VR and AR audience members, acting as time travellers and guardians respectively.

Using branching narratives, based upon audience performance and decisions made together, 8 alternative outcomes to the storyline were created to encourage replay and depth of experience.
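As an illustration only, one simple way to arrive at 8 outcomes is three yes/no decision points, since 2 × 2 × 2 = 8. The write-up does not say how the endings were actually structured, so the three decision names below are hypothetical placeholders, and this is a minimal sketch rather than the production logic.

```python
from itertools import product

# Hypothetical decision points: the article does not name the real ones.
DECISIONS = ("decision_a", "decision_b", "decision_c")


def ending_for(choices: dict[str, bool]) -> int:
    """Map three yes/no group outcomes to an ending number from 0 to 7."""
    index = 0
    for name in DECISIONS:
        # Shift the running index left and append this decision as one bit.
        index = (index << 1) | int(choices[name])
    return index


# Enumerate every combination of decisions to confirm each has its own ending.
all_endings = {
    ending_for(dict(zip(DECISIONS, combo)))
    for combo in product((False, True), repeat=len(DECISIONS))
}
```

Treating each group decision as one bit keeps the mapping easy to reason about: every distinct path through the three decisions lands on a distinct ending.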

The initial development saw 4 Oculus Quest VR devices configured to operate within a co-located shared space, so that each time traveller audience member could interact within the same physical space, with virtual avatar representations ensuring that unintended and unwanted contact was not made between users.

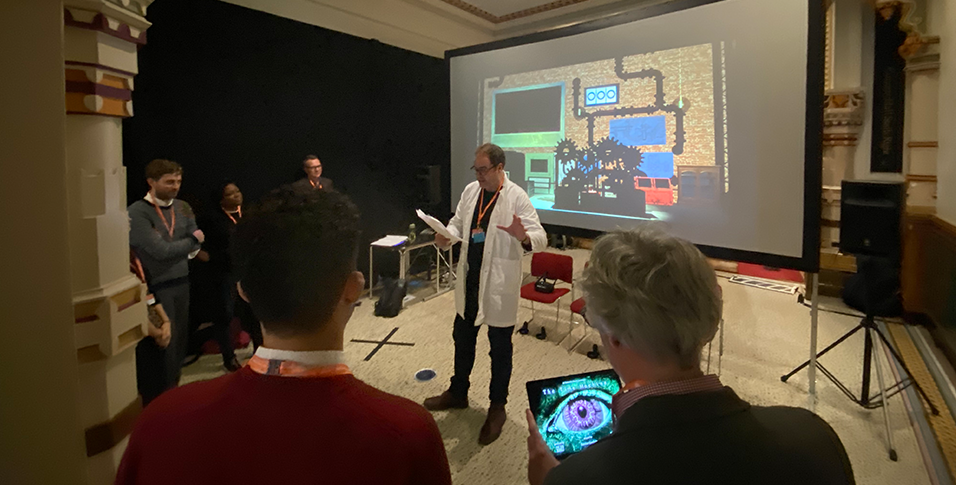

The guardian audience members used one of 4 Android-based tablets to give them an AR magic window into the virtual world of the time travellers, allowing them to communicate as they went on their journey into the future to meet H.G. Wells himself.

A traditional projection screen showing 3D content in 2D was utilised to ground the space for all audience members when not using devices, and to receive their final reckoning depending upon their performance and decisions made.

Initially, the experience was synchronised and controlled via a tablet, connected to a Windows-based PC on the same local network. The project was later accepted as part of the Digital Catapult 5G Testbed Accelerator Programme, which saw the data and synchronisation servers become remote to the venue of performance, operating over the 5G network.

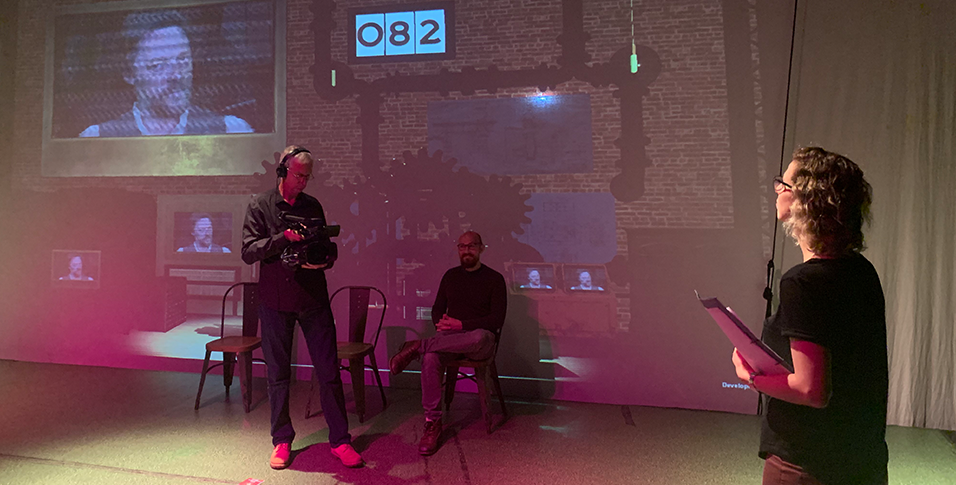

The final piece of hardware was a solo actor / stage tech hand who drove the narrative performance, guided the audience members into their devices and provided ad-lib interjections as needed. For the prototype user tests, CreativeXR pitch and marketplace day, we were joined by Katy Schutte (Knightmare Live) to play Filby, Head of IT.

H.G. Wells was played by Nicholas Boulton (Game of Thrones, Shakespeare in Love), who was volumetrically captured using the DoubleMe system at the Brighton Digital Catapult Immersive Lab. The system uses two Microsoft Kinect 2 depth cameras to create a point cloud animation file, which was then taken into Unity and rendered as a low-resolution hologram of the character.

The Oculus Quest co-location shared-space capability had to be created from scratch, since there are currently no official SDKs or support for such features. This was achieved by configuring each headset to a physical position within a known volumetric space, with each device's co-ordinates correlated across the network to update the 3D avatar representations accordingly.
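The core idea can be sketched as a change of co-ordinate frame: each headset tracks itself relative to its own local origin, and a per-device calibration says where that origin sits in the shared room. The Python below is a simplified floor-plane illustration, not the Unity code used in the project. The `Calibration` type, the `local_to_room` function and the rotation convention are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Calibration:
    """Where a headset's local tracking origin sits in the shared room frame."""
    origin_x: float  # metres, room frame
    origin_z: float  # metres, room frame
    yaw: float       # radians, rotation of the local frame about the vertical axis


def local_to_room(cal: Calibration, x: float, z: float) -> tuple[float, float]:
    """Convert a floor-plane position from headset-local to room co-ordinates."""
    cos_y, sin_y = math.cos(cal.yaw), math.sin(cal.yaw)
    # Rotate the local position by the calibrated yaw, then offset by the origin.
    room_x = cal.origin_x + cos_y * x - sin_y * z
    room_z = cal.origin_z + sin_y * x + cos_y * z
    return room_x, room_z


# Example: a headset whose local origin is 2 m along the room's x axis and
# turned a quarter turn. One metre "forward" locally lands at roughly (2, 1).
cal_b = Calibration(origin_x=2.0, origin_z=0.0, yaw=math.pi / 2)
room_x, room_z = local_to_room(cal_b, 1.0, 0.0)
```

Once every device reports positions in the same room frame, those values can be sent over the network and used to place each user's avatar where that person physically stands.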

The underlying co-location platform was also utilised for the AR tablet devices, so that the magic window effect lined up the virtual world with what the guardian users could see around the tablet.

The 2D projection content was also created in Unity and compiled as a Windows-PC application connected to the projector.

Control and synchronisation on the Android tablet drove the VR, AR and projected applications to ensure that each scene was shown together, and could be advanced as necessary. This all ran through a local Photon server application, reached over WiFi.
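The pattern described here, one controller advancing a scene and every connected application following it, can be sketched in a few lines. The example below is an in-memory simulation in Python and does not use the Photon API. The class names, the sequence-number check and the client labels are assumptions added for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SceneClient:
    """Stands in for a VR, AR or projection application."""
    name: str
    scene: int = 0
    last_seq: int = 0

    def receive(self, seq: int, scene: int) -> None:
        # Ignore duplicate or stale messages so a late packet cannot rewind a scene.
        if seq > self.last_seq:
            self.last_seq = seq
            self.scene = scene


@dataclass
class SceneController:
    """Stands in for the control tablet that advances the show."""
    clients: list[SceneClient] = field(default_factory=list)
    scene: int = 0
    seq: int = 0

    def advance(self) -> None:
        self.scene += 1
        self.seq += 1
        # In a real deployment this loop would be a network broadcast.
        for client in self.clients:
            client.receive(self.seq, self.scene)


clients = [SceneClient("vr-1"), SceneClient("ar-1"), SceneClient("projector")]
controller = SceneController(clients=clients)
controller.advance()
controller.advance()
# A delayed copy of the first message arrives late and is ignored.
clients[0].receive(1, 1)
```

Numbering each message means a device that receives an old message after a newer one stays on the current scene, which matters more once the server sits outside the venue.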

The 25-minute experience was showcased at The Old Market, Digital Catapult (London), Digital Catapult (Brighton) and The Brighton Dome. As a result, a number of 5G mobile network operators have shown an interest in how the concept can be taken further to encompass greater bandwidth, lower latency and more devices connected to a single network access point, to demonstrate and deliver the promises of the technology to reach true theatre-scale deployments.

If you would like to find out more about how collaborative shared-space experiences can enhance immersive learning and training scenarios, get in touch below.
